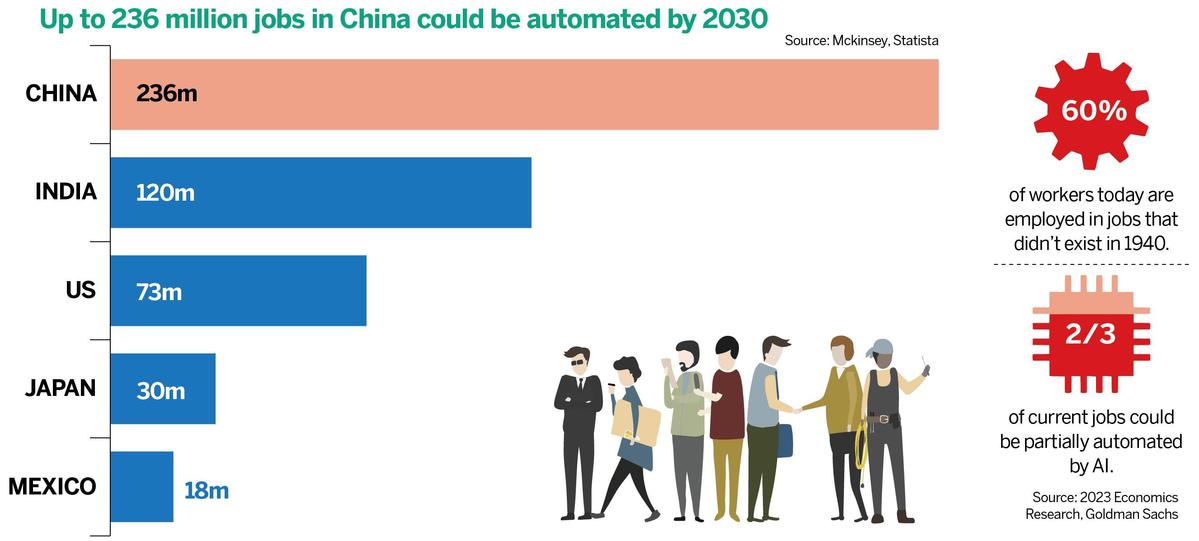

Could supersmart machines replace humans?

Hallucinating AI

Are supersmart, self-learning AI systems able to outwit their human creators? Strange incidents are surfacing as AI "hallucinations," in which AI generates seemingly plausible results that are false. No one can explain how an engine designed to search for answers can invent its own fake responses that are logical and believable.

Dmitry Tokar, co-founder of Zadarma, argues that problems could arise if models capable of feeling emotion are developed. "Emotion is what makes a person dangerous, and AI is supposed to be just knowledge put together. As long as AI is not programmed with emotions that can lead to uncontrollable actions, I see no danger in its development."

Dr Hinton, who resigned from his position leading AI at Google so that he could speak freely to the world at large, warns that we are at an inflection point in AI development that requires oversight, transparency, accountability and global governance before it is too late. He said he hopes corporations can shift their investment and resources, now split 99 percent to development and 1 percent to safety auditing, to a balanced 50:50 share.
